Responsible AI as a feature, not a footer

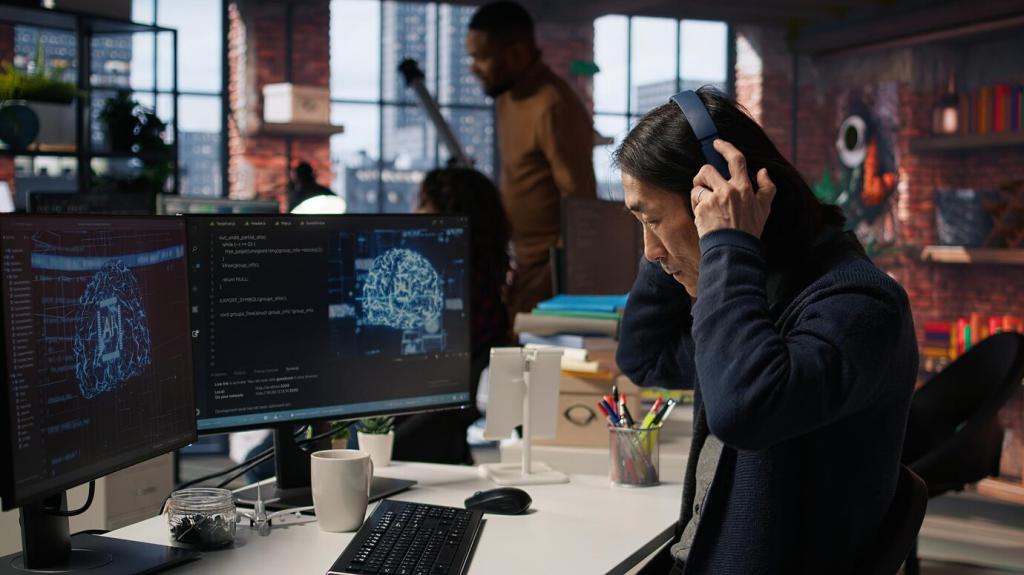

Bias hides in defaults. Measure with representative datasets, involve the people a system affects, and publish its limitations. The team behind one recruiting tool paused a rollout after discovering that it under-referred career switchers. How are you testing fairness today, and which metric or story has most challenged your assumptions so far?
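
To make "measure" concrete, here is a minimal sketch of one possible check: compare referral rates across groups and look at the ratio of the lowest rate to the highest. Everything in it is an assumption for illustration, including the group labels, the sample records, and the 0.8 review threshold (which echoes the four-fifths rule of thumb from US employment selection guidance). It is not the method used by the recruiting tool mentioned above.

```python
from collections import defaultdict


def referral_rates(records):
    """Return the referral rate per group from (group, was_referred) pairs."""
    totals = defaultdict(int)
    referred = defaultdict(int)
    for group, was_referred in records:
        totals[group] += 1
        referred[group] += int(was_referred)
    return {group: referred[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was referred in any group, so rates are equal
    return min(rates.values()) / highest


# Hypothetical sample: 10 candidates per group, invented for illustration.
sample = (
    [("career_switcher", True)] * 2
    + [("career_switcher", False)] * 8
    + [("same_field", True)] * 5
    + [("same_field", False)] * 5
)

REVIEW_THRESHOLD = 0.8  # assumed cut-off; pick one that fits your context

rates = referral_rates(sample)
ratio = disparity_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(rates)
print(f"disparity ratio: {ratio:.2f}, needs review: {needs_review}")
```

A single ratio like this is only a starting signal. It says nothing about why the gap exists, so it works best alongside the conversations with affected people and the published limitations described above.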

Explainability builds confidence. Offer inline reasons, examples, and controls users can tweak. One customer renewed after a transparent walkthrough demystified a tricky recommendation. Share the clearest explanation you provide today, and what users still say feels mysterious or unpredictable in practice.